Digit MNIST

Repo link

All my code is available here: https://github.com/AdrienTriquet/IA1

Introduction

This lab is a classic starting point for CNNs and TensorFlow: MNIST digit recognition.

After building the network, our goal was to see how different parameters affect the results and, of course, to understand why.

The parameters

-

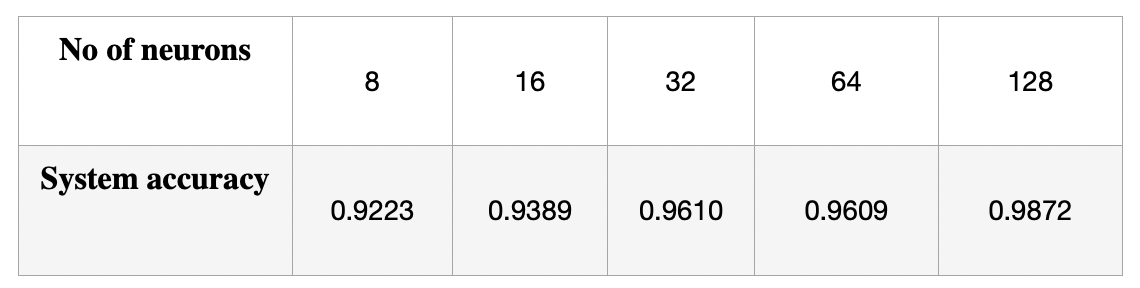

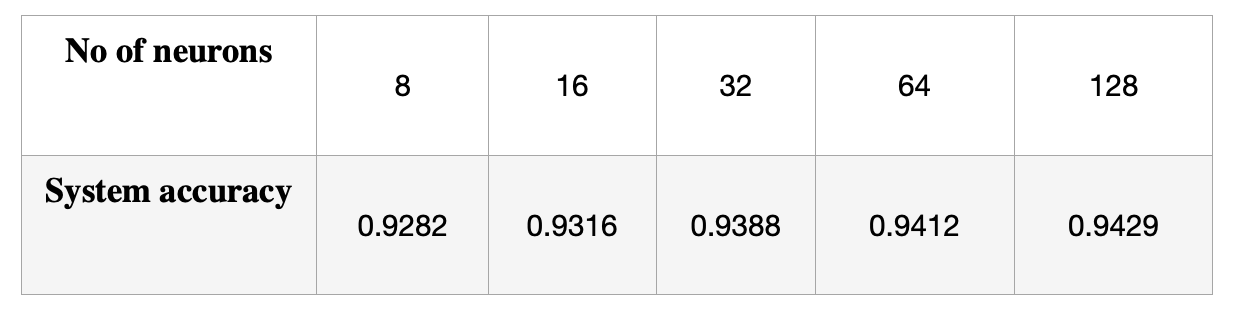

Number of neurons

Here are the results for 5 epochs and a batch size of 32, showing the impact of the number of neurons in the input dense layer:

I clearly saw that the more neurons you have, the longer it takes to compute. This is expected, but the difference is really big. However, convergence is far faster: with 2 epochs and 128 neurons we get approximately the same accuracy as with 5 epochs and 8 neurons.
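To give a rough idea of why the computing time grows with the number of neurons, the sketch below counts the trainable parameters of a small fully connected network. It assumes a flattened 28x28 input, one hidden dense layer, and a 10-class output layer; this layout and the function names are illustrative assumptions, not necessarily the exact network used in this lab.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_inputs * n_units + n_units


def mlp_params(hidden_units: int, n_inputs: int = 28 * 28, n_classes: int = 10) -> int:
    """Parameters of the assumed network: flatten -> dense(hidden_units) -> dense(n_classes)."""
    return dense_params(n_inputs, hidden_units) + dense_params(hidden_units, n_classes)


# Hypothetical layer sizes, chosen to bracket the 8 and 128 neurons mentioned above.
for units in (8, 32, 128):
    print(units, "neurons ->", mlp_params(units), "parameters")
# 8 neurons -> 6370 parameters
# 32 neurons -> 25450 parameters
# 128 neurons -> 101770 parameters
```

Under these assumptions, going from 8 to 128 neurons multiplies the number of weights to update by roughly 16, which is consistent with the longer training time per epoch.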

-

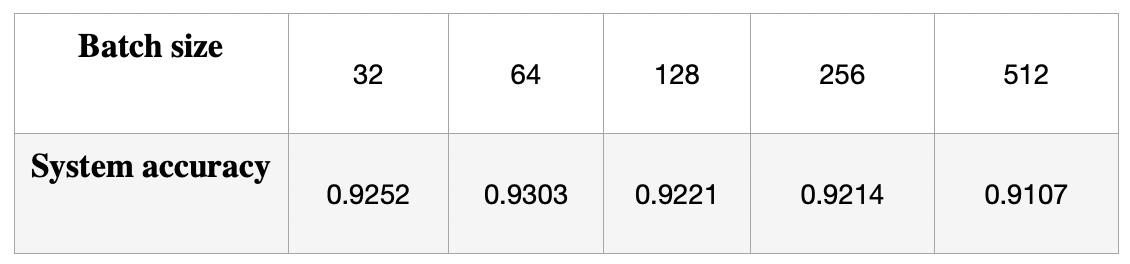

The batch size

We observe that the accuracy stays almost constant. The batch size does not change the accuracy because all our samples are analyzed either way, simply in bigger or smaller batches.
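As a small illustration of what the batch size does change, the sketch below computes the number of weight updates per epoch. It assumes the full 60,000-image MNIST training set with no validation split; the batch sizes other than 32 are hypothetical examples.

```python
import math


def updates_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of batches (one weight update each) needed to see every sample once."""
    return math.ceil(n_samples / batch_size)


N_TRAIN = 60_000  # assumption: whole MNIST training set, no validation split

for batch_size in (16, 32, 64, 128):
    steps = updates_per_epoch(N_TRAIN, batch_size)
    print(f"batch size {batch_size:>3}: {steps} updates per epoch")
# batch size  16: 3750 updates per epoch
# batch size  32: 1875 updates per epoch
# batch size  64: 938 updates per epoch
# batch size 128: 469 updates per epoch
```

Every image is still processed exactly once per epoch; only the number of updates, and the number of samples averaged in each one, differs.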

-

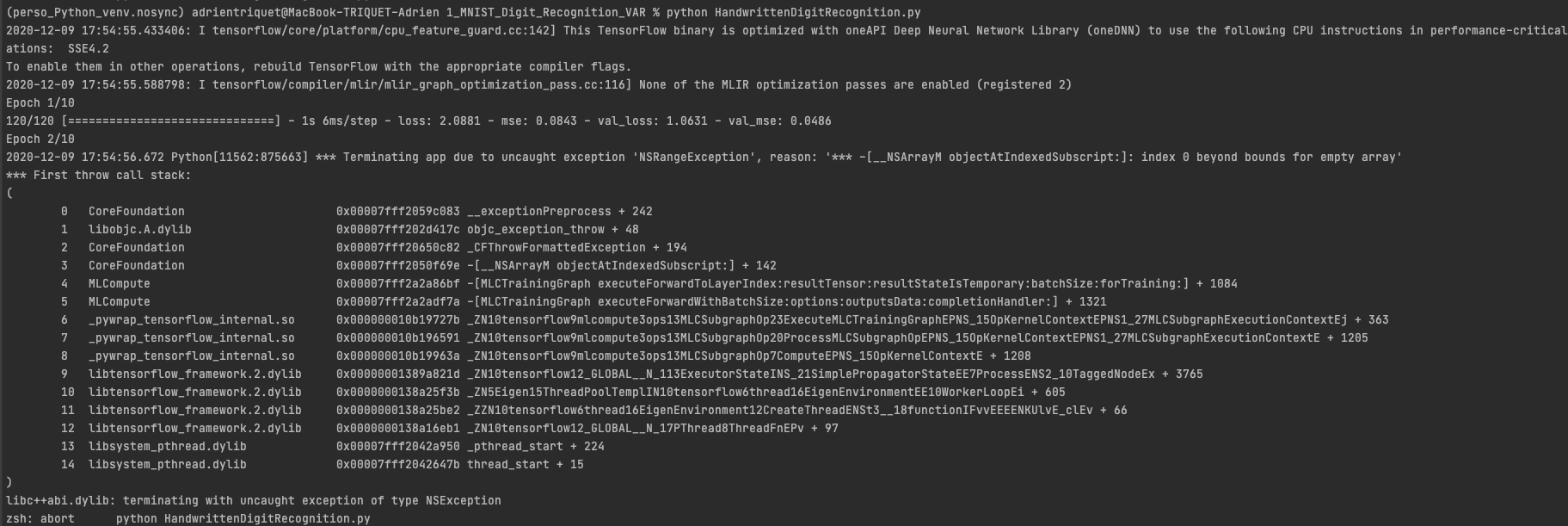

The metric

My results were around 0.93 with the 'accuracy' metric. However, with 'mse' the run crashed right after the end of the first epoch, whatever I tried.

We can still see that after the first epoch, the mse is around 0.04, so far lower than before.
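The two metrics are on different scales, which the following sketch tries to show. It assumes one-hot labels and per-class probabilities as network output; the helper functions and the sample predictions are made up for illustration and are not taken from the lab code.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose highest predicted probability falls on the true class."""
    hits = 0
    for target, probs in zip(y_true, y_pred):
        predicted = max(range(len(probs)), key=probs.__getitem__)
        hits += int(target[predicted] == 1)
    return hits / len(y_true)


def mse(y_true, y_pred):
    """Mean of the squared differences over every output value of every sample."""
    total, count = 0.0, 0
    for target, probs in zip(y_true, y_pred):
        for t, p in zip(target, probs):
            total += (t - p) ** 2
            count += 1
    return total / count


# Made-up example: the true digit is 3, encoded as a one-hot vector.
label = [0, 0, 0, 1, 0, 0, 0, 0, 0, 0]
uniform = [0.1] * 10                                    # no idea: same score for every digit
confident = [0.02, 0.02, 0.02, 0.82, 0.02, 0.02, 0.02, 0.02, 0.02, 0.02]

print(round(mse([label], [uniform]), 4))       # about 0.09
print(round(mse([label], [confident]), 4))     # about 0.0036
print(accuracy([label], [confident]))          # 1.0
```

With 10 classes, a network that gives the same score to every digit already has an mse of about 0.09, so a value around 0.04 is below that baseline. Unlike accuracy, a lower mse is better.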

-

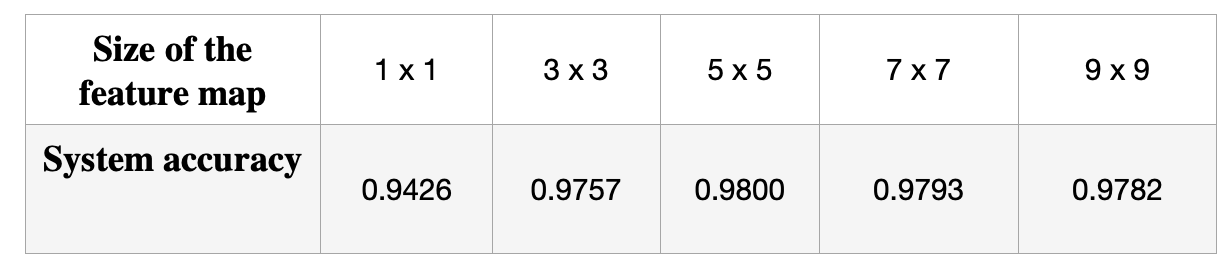

Feature map

For the next results, we used the second application, with a deeper and more complex CNN.

We see that the more feature maps there are, the higher the accuracy gets and the faster it gets there. However, we reach a plateau beyond which there is no need to go higher.
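To get a feel for what adding feature maps costs, the sketch below counts the parameters and output values of a single convolution layer. The 3x3 kernel, stride of 1, and absence of padding are assumptions made for the example, as are the function names; the 28x28 single-channel input matches the MNIST images.

```python
def conv2d_params(n_filters: int, kernel_size: int = 3, in_channels: int = 1) -> int:
    """Trainable parameters: one kernel plus one bias per filter."""
    return (kernel_size * kernel_size * in_channels + 1) * n_filters


def conv2d_output_values(n_filters: int, image_size: int = 28, kernel_size: int = 3) -> int:
    """Values produced per image, assuming stride 1 and no padding."""
    side = image_size - kernel_size + 1
    return side * side * n_filters


# Hypothetical filter counts for comparison.
for filters in (8, 16, 32):
    print(filters, "filters ->",
          conv2d_params(filters), "parameters,",
          conv2d_output_values(filters), "output values")
# 8 filters -> 80 parameters, 5408 output values
# 16 filters -> 160 parameters, 10816 output values
# 32 filters -> 320 parameters, 21632 output values
```

Under these assumptions the convolution itself stays small, but its output grows linearly with the number of filters, and so does the input of whatever layer follows it.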

-

Neurons again

The conclusion is the same as before.

-

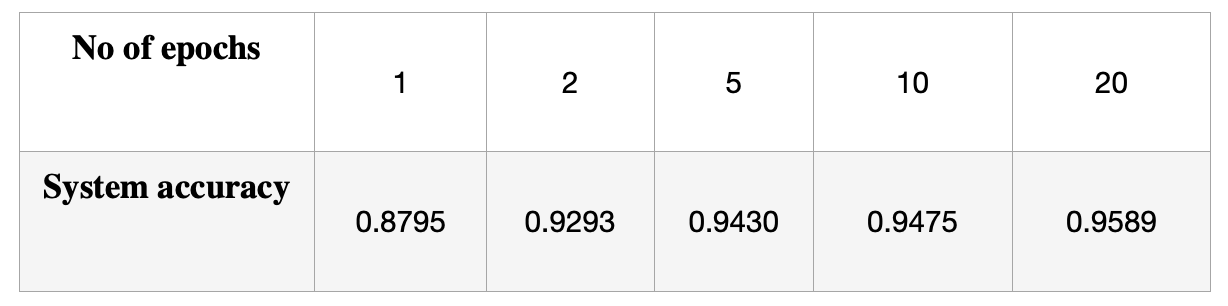

Epochs

With 8 filters in the convolution layer and 128 neurons, I got:

The accuracy keeps climbing as the number of epochs increases.