Fashion MNIST

Repo link

All my code is available here: https://github.com/AdrienTriquet/IA2

Introduction

This lab is a classic way to get started with CNNs and TensorFlow: clothing recognition on Fashion MNIST. It is a little harder than the digit version.

Once again, let’s see the impact of different parameters in this new CNN.

Note: training all these networks can take a very long time, so a reasonably powerful computer helps.

Parameters


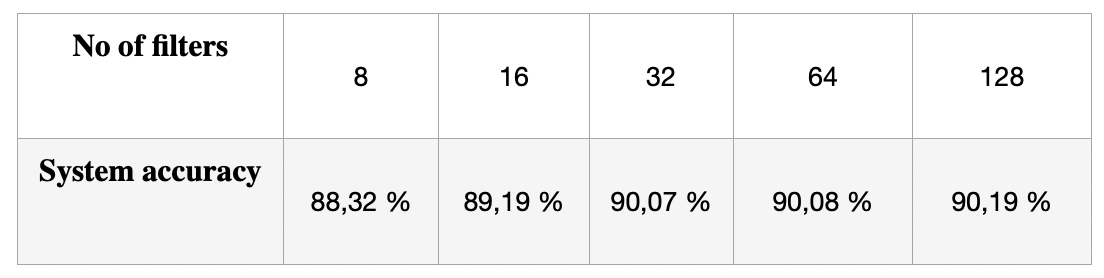

Number of filters

For 5 epochs I got:

The more filters the network has, the higher the final accuracy, and the fewer epochs it needs to converge. The price is a much longer computation time per epoch.
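To make the extra cost concrete, here is a minimal sketch of a Keras network whose number of filters can be varied. The architecture (one 3×3 convolution, one max-pooling layer, one hidden dense layer of 32 neurons) and the name `build_cnn` are assumptions for illustration, not necessarily the exact model from the repo.

```python
import tensorflow as tf


def build_cnn(filters, neurons=32):
    """Small CNN for 28x28 grayscale images (assumed architecture)."""
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        tf.keras.layers.Conv2D(filters, (3, 3), activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(neurons, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),  # 10 clothing classes
    ])


# The parameter count grows with the number of filters,
# mostly because the flattened feature map gets wider.
for filters in (16, 32, 64):
    model = build_cnn(filters)
    print(filters, "filters ->", model.count_params(), "parameters")
```

In this sketch, doubling the filters roughly doubles the number of weights to train, which is one reason each epoch gets slower.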


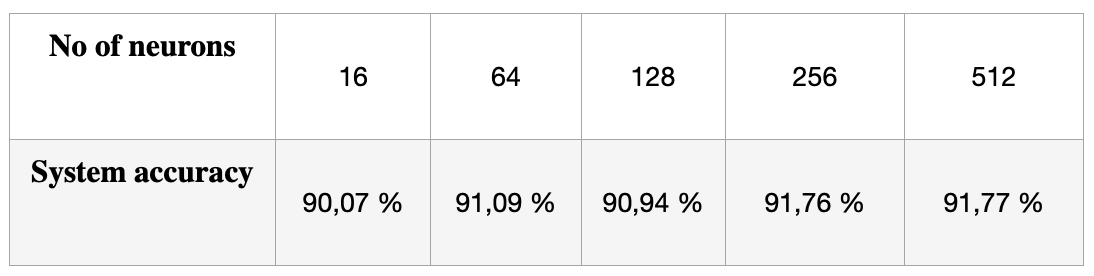

Neurons

More neurons mean a longer computation but a better accuracy, up to a plateau: beyond a certain point, adding neurons no longer helps.
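The cost side of this trade-off can be estimated by hand. The sketch below counts the weights of the dense part of the network, assuming the convolution and pooling layers output a 13×13×32 feature map (a 3×3 convolution without padding followed by 2×2 pooling on 28×28 images). The helper name `dense_head_params` is hypothetical.

```python
def dense_head_params(flat_features, neurons, classes=10):
    """Weights and biases of a hidden dense layer plus the output layer."""
    hidden = flat_features * neurons + neurons
    output = neurons * classes + classes
    return hidden + output


# Assumed size of the flattened feature map: 13 * 13 * 32 values.
FLAT_FEATURES = 13 * 13 * 32

for neurons in (32, 64, 128, 256, 512):
    print(neurons, "neurons ->", dense_head_params(FLAT_FEATURES, neurons), "parameters")
```

The count grows linearly with the number of neurons, while the accuracy gain, as observed above, eventually flattens.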


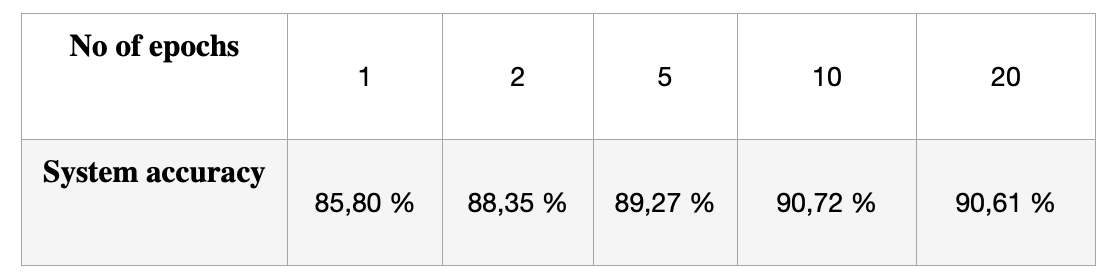

Epochs

With too many epochs, the model overfits the training data, so its accuracy on unseen data drops.
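One simple way to spot this point is to look at the validation accuracy epoch by epoch, for example the list Keras stores in `history.history["val_accuracy"]` when `fit` is given validation data and the `accuracy` metric. The sketch below uses made-up values purely for illustration; they are not results from this lab, and `best_epoch` is a hypothetical helper.

```python
def best_epoch(val_accuracy):
    """Return the 1-based epoch with the highest validation accuracy."""
    best_index = max(range(len(val_accuracy)), key=lambda i: val_accuracy[i])
    return best_index + 1


# Made-up values for illustration only, NOT measurements from this lab.
illustrative_val_accuracy = [0.85, 0.88, 0.90, 0.91, 0.905, 0.90]

# Training past this epoch no longer improves accuracy on unseen data.
print("best epoch:", best_epoch(illustrative_val_accuracy))
```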


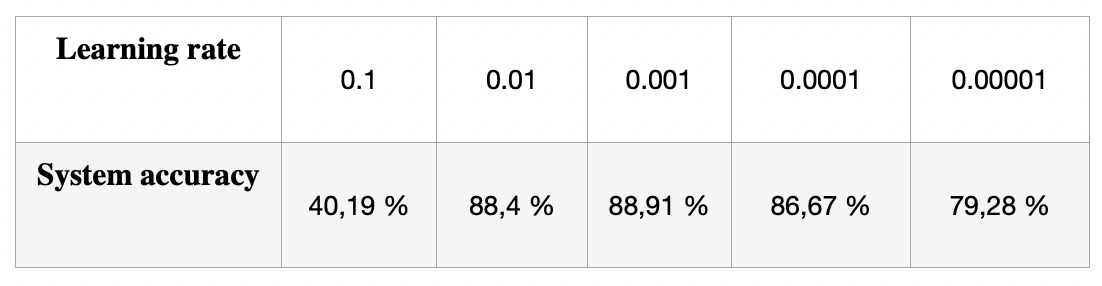

Learning rate

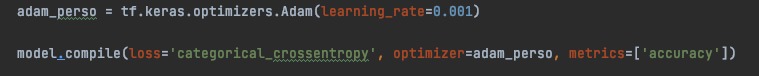

I used the function shown above to implement a modified optimizer.

Both the convergence time and the accuracy depend on the project: the best learning rate has to be found empirically, by testing several values on the model.
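As a rough sketch of such a test, the snippet below creates one optimizer per candidate learning rate. Adam, the candidate values and the name `make_optimizer` are assumptions for illustration; the optimizer actually used in the lab may differ.

```python
import tensorflow as tf


def make_optimizer(learning_rate):
    """Return an optimizer with a custom learning rate (Adam is an assumption)."""
    return tf.keras.optimizers.Adam(learning_rate=learning_rate)


# Hypothetical candidate values; each one would be used to compile
# and train a fresh copy of the model before comparing the results.
for learning_rate in (0.1, 0.01, 0.001):
    optimizer = make_optimizer(learning_rate)
    print(optimizer.get_config()["learning_rate"])
```

Each optimizer would then be passed to `model.compile(optimizer=...)` on a newly built model, so that runs do not share weights.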


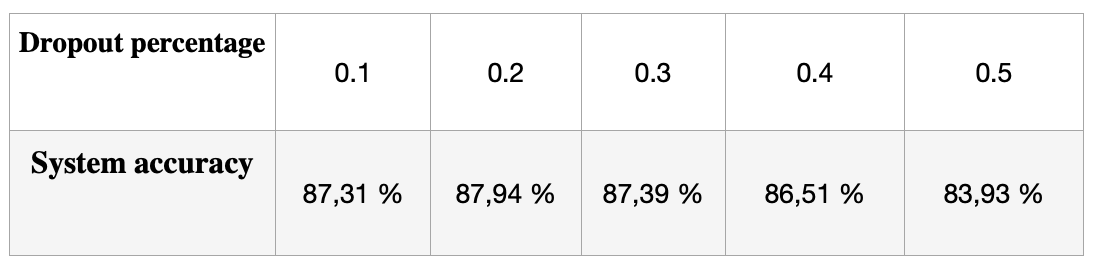

Dropout

Let’s see how adding a dropout layer changes the results. With 5 epochs I got:

As far as I could observe, the convergence speed did not change significantly. The results are close because the model does not overfit in so few epochs, so dropout has little to correct.
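For reference, here is what a dropout layer does to its inputs, written as a plain-Python sketch of the usual "inverted dropout" scheme (the one Keras' `Dropout` layer follows). The function name and the rate of 0.5 are assumptions for illustration.

```python
import random


def dropout(activations, rate, training, rng):
    """Zero each activation with probability `rate` while training.

    Kept values are scaled by 1 / (1 - rate) so the expected sum is unchanged.
    At inference time the input is returned as is.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep_scale = 1.0 / (1.0 - rate)
    return [a * keep_scale if rng.random() >= rate else 0.0 for a in activations]


rng = random.Random(0)
activations = [1.0] * 8
print("training: ", dropout(activations, 0.5, True, rng))
print("inference:", dropout(activations, 0.5, False, rng))
```

Because neurons are only dropped during training, the effect mostly shows up when the network would otherwise overfit, which matches the small difference observed over 5 epochs.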


Padding

How does padding change the results? With 5 epochs, 32 neurons and 32 filters, I got 87.91 % accuracy. With padding, it rises to 90.45 %.

With 64 filters, the accuracy was 89.35 % without padding and 90.84 % with it. The gain is noticeably smaller: 1.49 points instead of 2.54.
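Part of the difference comes from how much of the image survives the convolution. The sketch below computes the output size of a convolution in both modes, assuming "padding" here means Keras' `padding="same"` as opposed to the default `padding="valid"`. The helper name `conv_output_size` is hypothetical.

```python
def conv_output_size(input_size, kernel_size, padding, stride=1):
    """Output width (or height) of a convolution along one dimension."""
    if padding == "same":
        # Zero-padding keeps the size (divided by the stride, rounded up).
        return -(-input_size // stride)
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the image.
        return (input_size - kernel_size) // stride + 1
    raise ValueError(f"unknown padding mode: {padding}")


for mode in ("valid", "same"):
    size = conv_output_size(28, 3, mode)
    print(mode, "->", size, "x", size)
```

Without padding, a 3×3 kernel shrinks a 28×28 image to 26×26, so the border pixels contribute to fewer outputs; with padding the output stays 28×28.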

Empirical best parameters

From the previous results, the best parameters are:

- Number of filters: 32 (to keep the computation time reasonable)

- Size of the convolution kernel: 3×3 (from the previous lab)

- Number of neurons: 256 (to keep the computation time reasonable)

- Number of epochs: 10

- Learning rate: 0.01
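Put together, these values could be used as in the following sketch. Only the five values in the list come from this lab; the layer layout, the Adam optimizer, the loss and the function names `build_best_model` and `train` are assumptions, so the repo remains the reference for the real code.

```python
import tensorflow as tf

# Values taken from the list above.
FILTERS = 32
KERNEL_SIZE = (3, 3)
NEURONS = 256
EPOCHS = 10
LEARNING_RATE = 0.01


def build_best_model():
    """Build and compile a small CNN with the parameters above (assumed layout)."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        tf.keras.layers.Conv2D(FILTERS, KERNEL_SIZE, activation="relu"),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(NEURONS, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=LEARNING_RATE),  # assumed optimizer
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model


def train():
    """Download Fashion MNIST, train for EPOCHS epochs and return the model."""
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.fashion_mnist.load_data()
    # Add a channel axis and scale pixel values to [0, 1].
    x_train = x_train[..., None].astype("float32") / 255.0
    x_test = x_test[..., None].astype("float32") / 255.0
    model = build_best_model()
    model.fit(x_train, y_train, epochs=EPOCHS, validation_data=(x_test, y_test))
    return model
```

Calling `train()` starts the full training run, which downloads the dataset on first use and can take a while on a CPU.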