Recurrent neural network

Colab link

All my code is available here: https://colab.research.google.com/drive/1Ott9Odhu1P6uQP186DWs3ApiEGlzC21i#scrollTo=DvwJIcmOKjOQ

Introduction

After several classes and personal work on CNNs, which analyse one piece of data after another, this class presents RNNs, which make it possible to analyse, for instance, a video as a whole and not simply as several independent frames. Indeed, RNNs can learn from sequential data.

They are used because of a limitation of FFNNs (Feed-Forward Neural Networks), which can only use fixed-size windows. Simply increasing the size of this window is not a solution either, as it might cause issues with the complexity of the labels.

Therefore, we use RNNs. They can handle variable-length sequences, preserve the order of the elements, and track long-term dependencies.

Theory

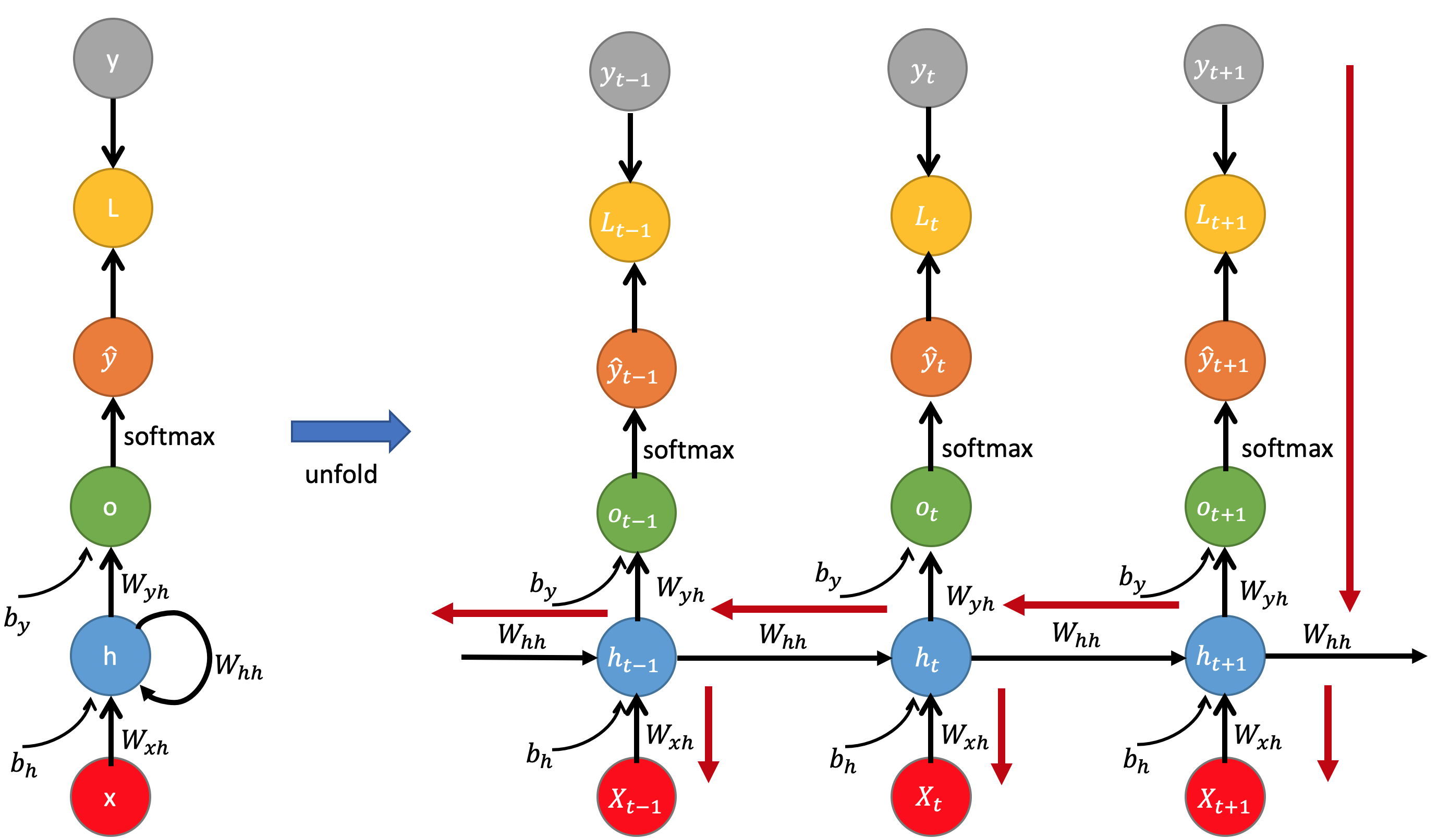

For the theory, the main thing I will remember is that the forward computation and the backpropagation are quite similar to those of the feed-forward networks I already know. However, as we go back through time, we compute the gradient again for each of the past states.

A first issue with these models is that the size of the data is not known in advance. Moreover, the meaning can change according to this size.

Common RNN

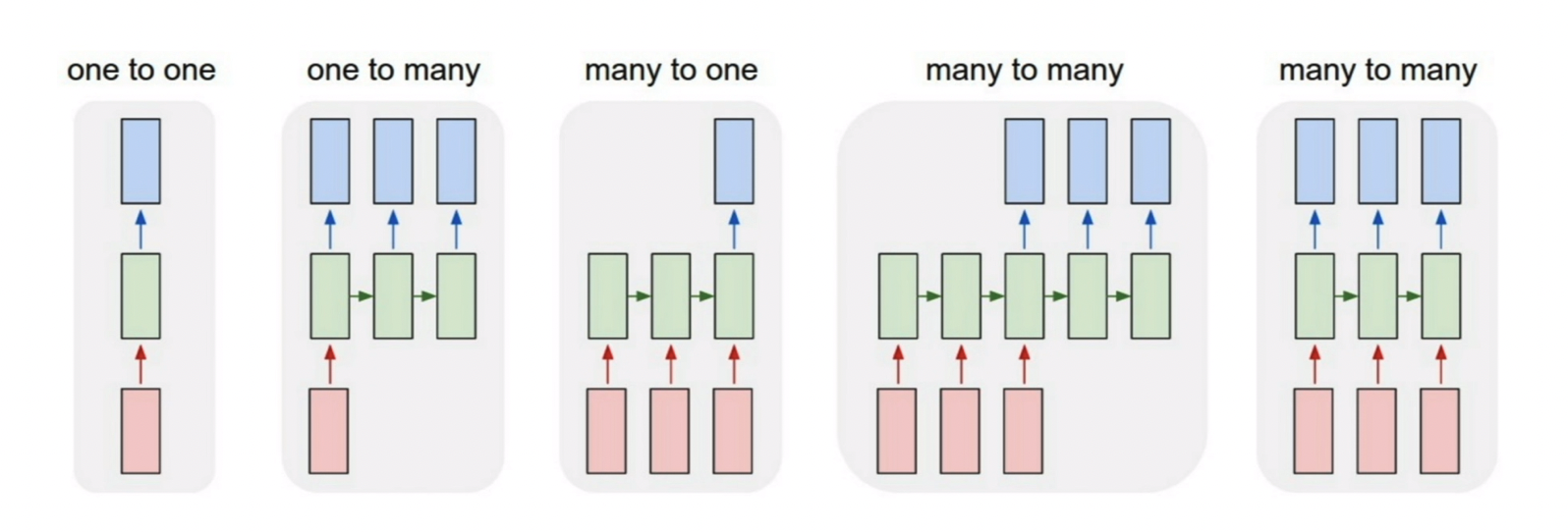

- Vanilla RNN: this is the name given to the different kinds of ‘basic’ networks obtained by considering different types of input and output. They are summarized here:

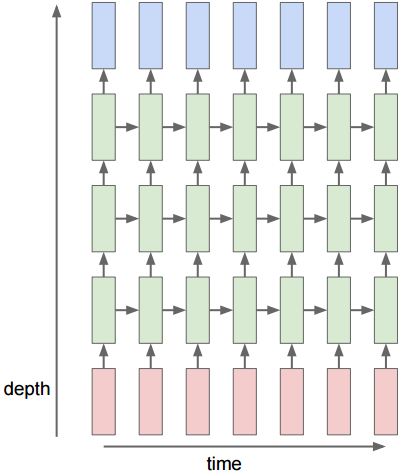

(red is the input, green is the network and blue is the output)


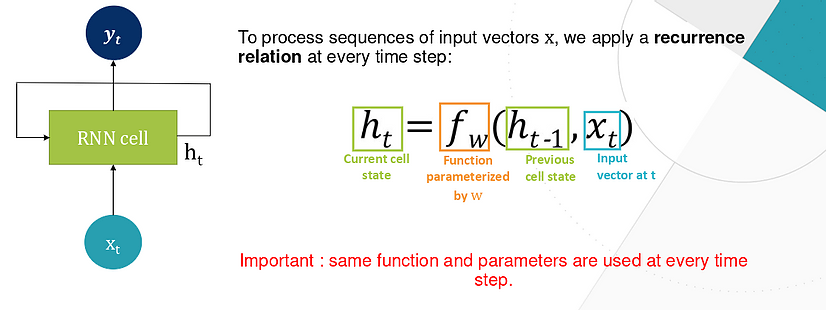

The network applies the same formula at every time step:
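A common form of this per-step update, assumed here rather than taken from the class material, is `h_t = tanh(W_xh x_t + W_hh h_(t-1) + b_h)`. The sketch below shows one step and a full pass over a sequence in NumPy. The names `rnn_step` and `rnn_forward`, the weight names and the sizes are illustrative assumptions.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN step: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Apply the same step (same weights) to every element of a sequence."""
    h = h0
    states = []
    for x_t in xs:  # the sequence can have any (non-zero) length
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes (assumption)
W_xh = rng.normal(scale=0.1, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

xs = rng.normal(size=(5, input_size))  # a sequence of 5 time steps
states = rnn_forward(xs, np.zeros(hidden_size), W_xh, W_hh, b_h)
print(states.shape)  # (5, 4)
```

Because the weights are shared across time steps, the same function works for a sequence of 2 steps or 200 steps, which is what lets an RNN handle variable-length inputs.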

Then, the ‘training’ is done by improving the parameters (weights, as for a CNN) through backpropagation:

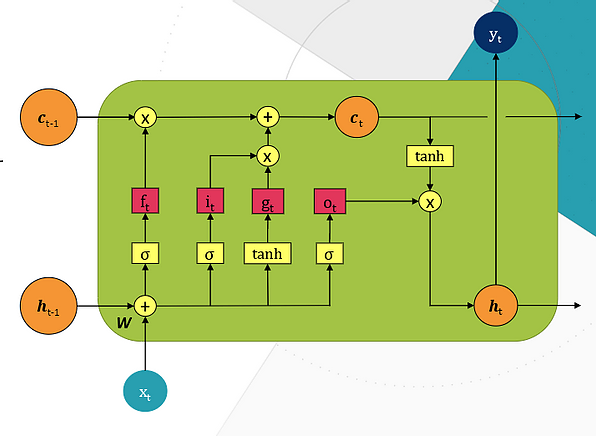

- LSTM (Long Short-Term Memory): LSTMs are a different type of RNN. They work much like a vanilla RNN, but the cells and the gradient are managed in a different way.

It has two internal states:

- Hidden state (short term)

- Cell state (long term)

It uses four gates to control the information in the two states:

- Forget gate: manages which information from the previous states should be forgotten or kept.

- Input gate: manages which values are written to the current cell.

- Input modulation gate: manages how much is written to the current cell.

- Output gate: manages the output of the current cell.
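The four gates and two states listed above can be sketched as a single LSTM step. This is a minimal NumPy illustration of the standard LSTM equations, not the code used in the class. The function name `lstm_step`, the packing of all four gates into one weight matrix `W`, and the sizes are assumptions made for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step.

    W has shape (4 * hidden, input + hidden) and b has shape (4 * hidden,):
    the four gates are stacked in the order forget, input, modulation, output.
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    f = sigmoid(z[0 * hidden:1 * hidden])  # forget gate: what to keep from c_prev
    i = sigmoid(z[1 * hidden:2 * hidden])  # input gate: which values to write
    g = np.tanh(z[2 * hidden:3 * hidden])  # input modulation gate: how much to write
    o = sigmoid(z[3 * hidden:4 * hidden])  # output gate: what to expose
    c_t = f * c_prev + i * g               # cell state (long term)
    h_t = o * np.tanh(c_t)                 # hidden state (short term)
    return h_t, c_t

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4  # illustrative sizes (assumption)
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)

h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x_t in rng.normal(size=(5, input_size)):  # sequence of 5 time steps
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (4,) (4,)
```

The cell state is updated only by an element-wise multiplication and an addition, which is the usual explanation for why gradients flow more easily through an LSTM than through a vanilla RNN.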

Application

Most of the time, multilayer RNNs (deep RNNs) are used.

Note: however, you do not add layers to an RNN the way you do to a CNN. You can feed the features output by one RNN layer into another one, but this is rarely done more than three times. It gives this:
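As a rough sketch of this stacking, assuming simple tanh RNN layers (the helper names `rnn_layer` and `init_layer` and all sizes are hypothetical, chosen only for the illustration), the hidden states of one layer become the input sequence of the next:

```python
import numpy as np

def rnn_layer(xs, W_xh, W_hh, b_h):
    """Run one vanilla RNN layer over a sequence and return all hidden states."""
    h = np.zeros(W_hh.shape[0])
    states = []
    for x_t in xs:
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

def init_layer(rng, input_size, hidden_size):
    """Small random weights for one layer (illustrative initialisation)."""
    return (rng.normal(scale=0.1, size=(hidden_size, input_size)),
            rng.normal(scale=0.1, size=(hidden_size, hidden_size)),
            np.zeros(hidden_size))

rng = np.random.default_rng(0)
sizes = [3, 8, 8, 4]  # input size followed by three hidden sizes (assumption)
layers = [init_layer(rng, n_in, n_out)
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

features = rng.normal(size=(6, sizes[0]))  # sequence of 6 time steps
for W_xh, W_hh, b_h in layers:
    # the hidden states of one layer are the input sequence of the next
    features = rnn_layer(features, W_xh, W_hh, b_h)
print(features.shape)  # (6, 4)
```

The sequence length (6 here) is preserved from layer to layer; only the feature size changes.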

RNNs are used in:

- Language modelling

- Image generation

- Sequence to sequence (translation for instance)

- Implicit / explicit attention (decisions taken thanks to past information)

- Image captioning

Lab

First, as always, we have to import the data and process it. Nothing new here: we create a dictionary with the images associated with their labels. Then we do some preprocessing to remove potential issues and to give the right type of data as input.

Then, we use a CNN to extract features from the data and give them as input to the RNN (which cannot deal with a raw picture, for instance). This CNN then becomes our feature encoder. We use state-of-the-art models pretrained on ImageNet. TensorFlow makes this easy.

Now comes the interesting part: the RNN!

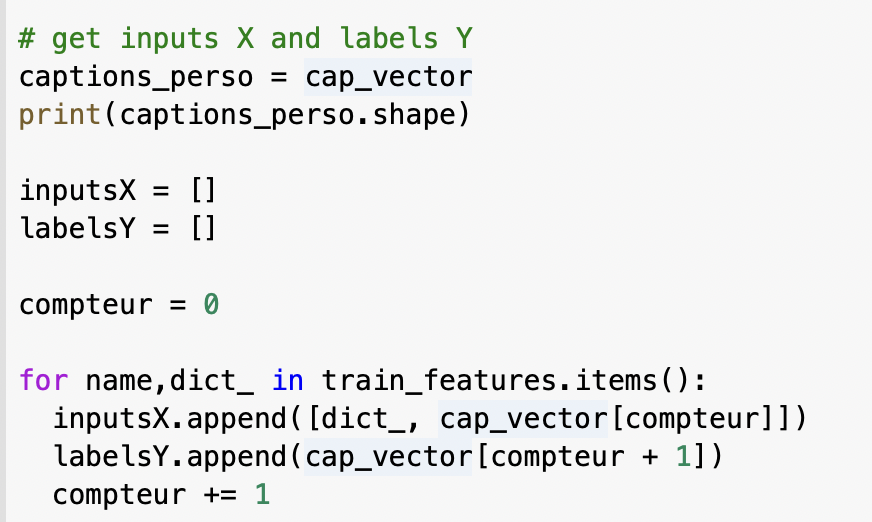

The main issue I faced was understanding the objects we were dealing with. Indeed, it took me a long time to arrive at a first solution:

However, I ran into many shape issues. I then understood that I was not taking the right value from ‘cap_vector’. Moreover, I had to implement a better ‘shifting’, because each caption value has to be paired with the one that comes after it.

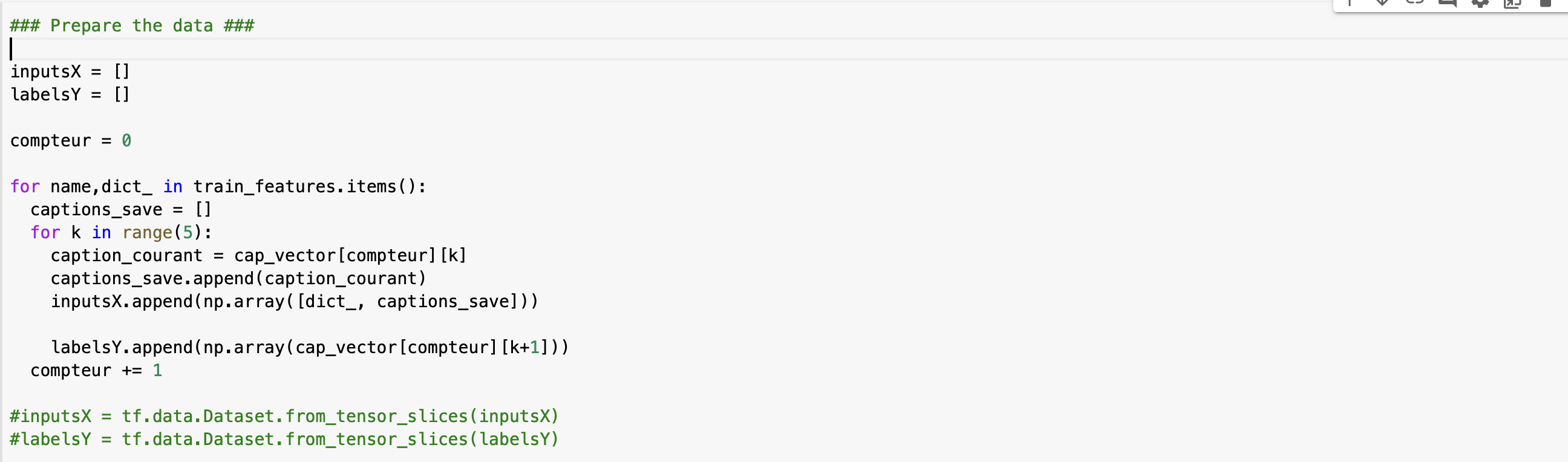
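The ‘shifting’ idea can be illustrated with a small sketch. It assumes that `cap_vector` holds one caption as a list of integer token ids. The real layout in the lab may differ, and the helper name `shift_caption` and the token ids are hypothetical.

```python
def shift_caption(cap_vector):
    """Split a tokenised caption into RNN inputs and next-token targets."""
    inputs = cap_vector[:-1]   # every token except the last
    targets = cap_vector[1:]   # the same tokens, shifted one step to the left
    return inputs, targets

# Hypothetical token ids: 1 = <start>, 2 = <end>, 0 = padding
cap_vector = [1, 14, 7, 32, 2, 0, 0]
inputs, targets = shift_caption(cap_vector)
print(inputs)   # [1, 14, 7, 32, 2, 0]
print(targets)  # [14, 7, 32, 2, 0, 0]
```

At each position the network receives `inputs[t]` and is trained to predict `targets[t]`, so both sequences have the same length, which helps avoid shape mismatches.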

I arrived at this solution:

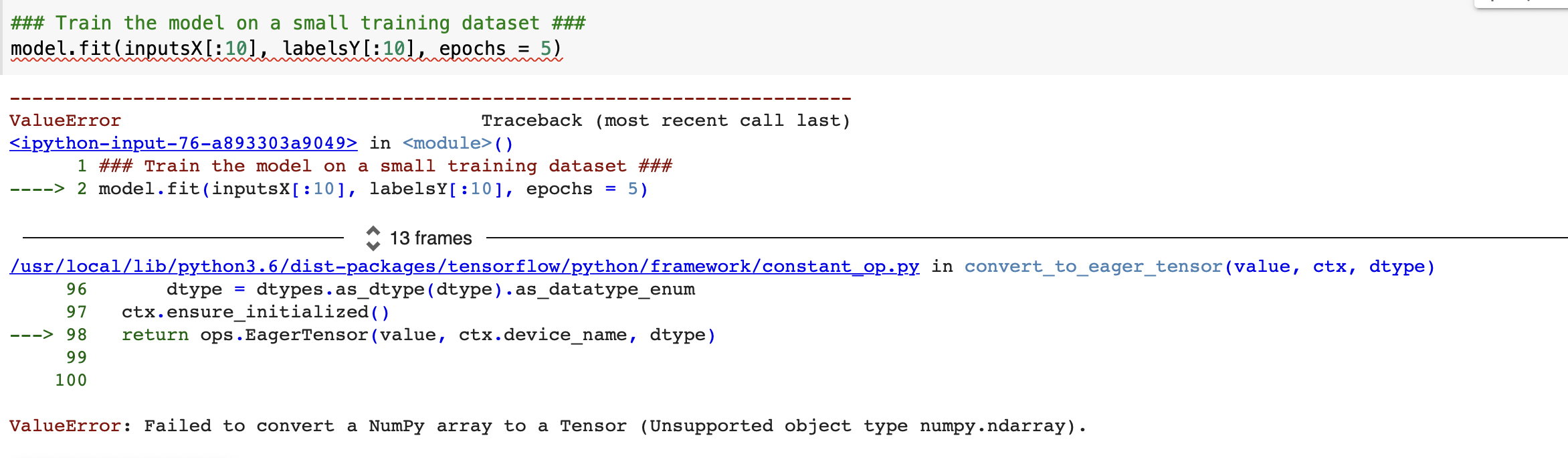

However, I never managed to resolve the many type issues I got, even with the tf.data functions:

It is quite frustrating, as I must be quite close to a working solution.